Lost in the Middle: Placing Critical Info in Long Prompts

Stop losing facts in long LLM prompts. Learn placement rules, query ordering, and retrieval tactics to boost accuracy and cut costs.

Generative AI models can handle hundreds of pages of text—but here's the thing: that doesn't mean they actually use it effectively. Even with these massive context windows we keep hearing about, we still don't fully understand how robustly language models process information that's spread across long inputs. Do they integrate details evenly? Or does position actually matter?

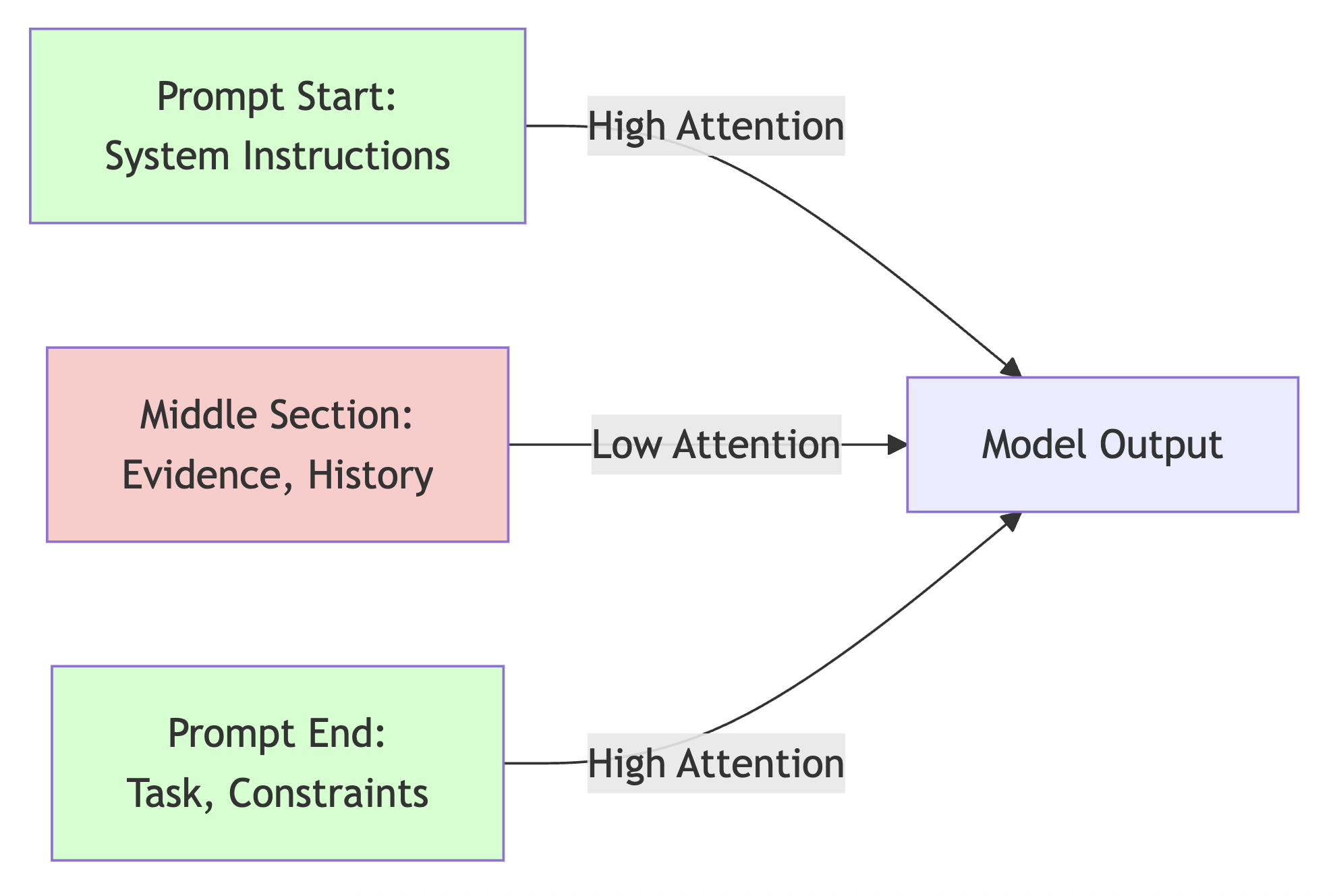

Well, it turns out position matters. A lot. Models tend to favor the beginning and end of inputs and often under-use critical details buried in the middle, a pattern researchers call "Lost in the Middle." Plot accuracy against the position of the relevant passage and you get a U-shaped curve: high at the edges, lowest in the center. It's fascinating and frustrating in equal measure. Simply moving a key instruction from the center to the top or bottom can flip your output from wrong to correct, even when the logical content is exactly the same.

For those of us building production AI systems, this isn't just some academic curiosity—it's a genuine reliability risk. If your RAG pipeline stuffs evidence into the middle of a long context, or your agent accumulates conversation history without re-anchoring instructions, accuracy will degrade. And here's the kicker: it happens silently. Understanding how models actually use long contexts—and how to structure prompts to counteract their positional bias—is essential if you want to build systems that perform consistently at scale.

Let me walk you through why position bias happens in decoder-only models, when you should expect it, and what you can actually do to mitigate it in production systems.

Why This Matters

Lost-in-the-middle isn't just some quirk. It's a structural artifact of how decoder-only transformers process sequences. When you retrieve ten documents, concatenate them together, and then append a question at the end, the model's attention tends to gravitate toward the boundaries. Facts sitting in documents 4 through 7? They're the ones most likely to be overlooked, even if they're the most relevant ones you've got.

Impact on real systems:

RAG pipelines return the correct chunks but then generate answers from the wrong ones

Long chat histories cause the model to forget mid-conversation context

Multi-document summarization drops key points from the middle of the input

If you're processing long legal, medical, or technical documents, running chatbots with extensive histories, or aggregating multi-document contexts, you should expect mid-content loss. The pattern is remarkably stable across decoder-only LLMs and, frustratingly, persists even with relative position schemes like RoPE. For a broader look at how accumulated context can degrade model performance, check out our article on context rot and why LLMs "forget" as their memory grows.

How It Works

1. Decoder-only models attend left-to-right with boundary bias

In a decoder-only architecture, each token attends only to the tokens that came before it. When you place your question at the end (which, let's be honest, feels natural), the documents are encoded before the model has seen the question, and the answer then has to pull the right facts out of everything that precedes it. What happens? Attention mass concentrates on the first few tokens, where instructions and framing typically live, and on the last few tokens, which hold the question itself. Middle content receives less attention weight, even when it's semantically the most important part.
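The causal constraint described above can be made concrete with a toy mask. This is a minimal sketch in plain Python: the eight-token layout and the helper names are illustrative assumptions, and it shows only which positions are visible to which, not how much attention weight a real model assigns.

```python
def causal_mask(n):
    """Lower-triangular mask: position i may attend to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def positions_that_see(mask, target_span):
    """Return positions whose mask row covers every index in target_span."""
    return [i for i, row in enumerate(mask) if all(row[j] for j in target_span)]


# Toy layout (an assumption for illustration): 6 document tokens, 2 question tokens.
n = 8
mask = causal_mask(n)

question_last = range(6, 8)   # documents at 0-5, question at 6-7
question_first = range(0, 2)  # question at 0-1, documents at 2-7

# Question last: no document token (0-5) is encoded with the question in view.
seen_by_last = positions_that_see(mask, question_last)
# Question first: every document token (2-7) can attend to the full question.
seen_by_first = positions_that_see(mask, question_first)

print(seen_by_last)   # [7]
print(seen_by_first)  # [1, 2, 3, 4, 5, 6, 7]
```

With the question at the end, only the final position sees the whole question. With the question at the start, every document position does.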

2. Training data encodes positional priors

Here's something I found particularly interesting: most pretraining corpora naturally place key information at document boundaries—titles, abstracts, conclusions. The model learns, quite reasonably, that beginnings and endings are high-signal zones. During inference, this prior sticks around: the model expects important facts near the edges and basically discounts mid-context material.

3. Long context windows don't fix the problem

You might think extending context to 128k or 200k tokens would solve this. It doesn't. Sure, it prevents truncation, but it doesn't rewire attention priorities. Without structural fixes such as proper ordering, chunking, and reranking, the model still under-attends to mid-context content, and it may hallucinate or fall back on whatever appeared early in the prompt. If you're trying to figure out which model best fits your application, including how context length impacts reliability, our guide on how to choose an AI model for your app offers some practical advice.

4. Encoder-decoder architectures reduce but don't eliminate bias

Encoder-decoder models can attend bidirectionally in the encoder, which does reduce some position sensitivity. But—and this is important—if you dump huge context into the encoder without any structure, you still get boundary bias. Don't treat architecture as a magic bullet that replaces good prompt and retrieval design. For more on when to use small versus large language models and the tradeoffs involved, take a look at our article on small vs large language models and when to use each.

What You Should Do

1. Place the question first

This one's simple but effective: move your query to the beginning of the prompt, before the retrieved context. Because a decoder-only model reads left to right, putting the question first anchors attention on the task and lets every document token be processed with the question already in view. In practice, structure your prompts like this:

Question: [user query]

Context:

[document 1]

[document 2]

...

This simple reordering can improve recall on long-context QA tasks, though the size of the gain depends on the model and the task, so measure it on your own data. Honestly, it's one of those changes that seems too simple to work, but it often does.
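The template above can be assembled with a small helper. This is a sketch, not a prescribed API: the function name `build_query_first_prompt` and the sample documents are hypothetical, and the `repeat_question` flag is an optional variation of my own that restates the question after the context.

```python
def build_query_first_prompt(question, documents, repeat_question=False):
    """Assemble a prompt with the question ahead of the retrieved context.

    repeat_question is an optional variation (not part of the template above):
    it restates the question after the documents.
    """
    parts = [f"Question: {question}", "Context:"]
    parts.extend(doc.strip() for doc in documents)
    if repeat_question:
        parts.append(f"Question: {question}")
    return "\n\n".join(parts)


# Hypothetical sample documents, for illustration only.
docs = [
    "Section 4: The supplier indemnifies the buyer.",
    "Section 9: Either party may terminate.",
]
prompt = build_query_first_prompt("What are the indemnity terms?", docs)
print(prompt)
```

Keeping prompt assembly in one function also makes it easy to A/B test question-first against question-last ordering on the same retrieved documents.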

2. Rerank retrieved chunks by relevance

Retrieval systems often return documents in arbitrary order or just score-descending order. Here's what you should do instead: rerank them so the most relevant chunks appear at the beginning and end of the context window, right where attention is strongest. Use a cross-encoder reranker or even a lightweight scoring model to reorder before concatenation.

Here's a minimal reranking flow using a cross-encoder:

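    from sentence_transformers import CrossEncoder

    model = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')

    query = "What are the indemnity terms?"
    # retrieve_chunks is a placeholder for your own retriever; it should return a list of strings
    chunks = retrieve_chunks(query, top_k=10)

    # Score each chunk against the query (higher means more relevant)
    scores = model.predict([(query, chunk) for chunk in chunks])

    # Sort best-first, comparing on the score only
    ranked = [chunk for _, chunk in sorted(zip(scores, chunks), key=lambda pair: pair[0], reverse=True)]

    # Keep the top 5, then alternate them between the front and the back so the
    # strongest chunks sit at the edges and the weakest of the five lands mid-context
    top = ranked[:5]
    context = "\n\n".join(top[0::2] + top[1::2][::-1])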

This ensures your high-signal content sits exactly where the model naturally attends.

3. Chunk documents to control context size

Break those long documents into short, semantically coherent chunks—I usually go with 200 to 500 tokens. Retrieve only the most relevant chunks instead of concatenating entire documents. This approach reduces noise, limits mid-context drift, and keeps the total context under the model's effective attention span.

When you're chunking, make sure to preserve logical boundaries—paragraphs, sections, clauses—so each chunk is self-contained and actually interpretable on its own.
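Here's one way the chunking advice above might look in code. It's a minimal sketch under two assumptions: paragraphs are separated by blank lines, and token counts are approximated by whitespace-separated words (swap in your model's tokenizer for real budgets). The function name `chunk_by_paragraph` and the sample text are hypothetical.

```python
import re


def chunk_by_paragraph(text, max_tokens=500):
    """Greedily pack whole paragraphs into chunks of at most max_tokens.

    Tokens are approximated by whitespace-separated words (an assumption).
    A single paragraph longer than max_tokens is kept whole rather than
    split mid-paragraph.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks, current, current_len = [], [], 0
    for para in paragraphs:
        n = len(para.split())
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and current_len + n > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(para)
        current_len += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


doc = (
    "Clause one covers scope.\n\n"
    "Clause two covers indemnity terms.\n\n"
    "Clause three covers termination."
)
chunks = chunk_by_paragraph(doc, max_tokens=9)
print(len(chunks))  # 2
```

The first two clauses total nine words and share a chunk. The third would push past the budget, so it starts a new one. Paragraph boundaries are never broken.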

4. Test with position-aware probes

Here's something I always recommend: validate your system by inserting a known fact at different positions in the context and measuring recall. A simple needle-in-haystack test works great:

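    def test_position_bias(model, context_chunks, needle, question, expected):
        """Insert the needle at the start, middle, and end; record whether the answer contains `expected`."""
        # Use len(context_chunks) for the end: list.insert(-1, x) would land before the last chunk
        positions = {"start": 0, "middle": len(context_chunks) // 2, "end": len(context_chunks)}
        results = {}
        for label, index in positions.items():
            # Build a fresh list each time so the needle never accumulates
            test_context = context_chunks[:index] + [needle] + context_chunks[index:]
            prompt = f"Question: {question}\n\nContext:\n" + "\n\n".join(test_context)
            # model.generate is assumed to take a prompt string and return the answer text
            answer = model.generate(prompt)
            # Check for the expected answer, since models rarely echo the needle verbatim
            results[label] = expected.lower() in answer.lower()
        return results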

If mid-position recall drops significantly (and it probably will), apply reranking or query-first ordering.
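To see more of the curve than three points, the probe can sweep several depths. The sketch below is self-contained and uses a toy stand-in for the model: `edge_only_stub` is hypothetical and "reads" only the first and last 120 characters of the prompt, so its U-shaped result is there by construction and says nothing about any real model. Replace it with a call to your own model client.

```python
def recall_by_depth(generate, filler_chunks, needle, question, expected,
                    depths=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """Insert the needle at each relative depth and record whether the answer contains `expected`."""
    results = {}
    for depth in depths:
        index = round(depth * len(filler_chunks))
        context = filler_chunks[:index] + [needle] + filler_chunks[index:]
        prompt = f"Question: {question}\n\nContext:\n" + "\n\n".join(context)
        answer = generate(prompt)
        results[depth] = expected.lower() in answer.lower()
    return results


def edge_only_stub(prompt, window=120):
    """Toy stand-in for a model: it only sees the first and last `window` characters."""
    visible = prompt[:window] + prompt[-window:]
    return "The code is 7421." if "7421" in visible else "I don't know."


filler = [f"Filler paragraph number {i} about nothing in particular." for i in range(20)]
results = recall_by_depth(
    edge_only_stub,
    filler,
    needle="The vault code is 7421.",
    question="What is the vault code?",
    expected="7421",
)
print(results)  # {0.0: True, 0.25: False, 0.5: False, 0.75: False, 1.0: True}
```

With a real model, run each depth over many different needles and filler sets and report the fraction recalled. A single trial per position is too noisy to act on.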

Conclusion: Key Takeaways

Lost-in-the-middle is a predictable artifact of decoder-only attention and training data structure. Models favor content at prompt boundaries and under-attend to middle sections, even when those sections literally contain the answer you're looking for.

Core mitigations:

Query-first prompts to anchor attention on the task

Reranking to place high-relevance chunks at boundaries

Chunking to limit context size and reduce noise

Position-aware testing to detect and measure bias in your pipeline

When to care:

You retrieve more than 3–5 documents per query

Your prompts exceed 4k tokens regularly

You're seeing hallucinations or missed citations despite correct retrieval

You run multi-turn conversations with long histories

The key is to design your retrieval and prompt structure to work with the model's attention biases, not against them. Once you accept that this is how these models work, you can build around it pretty effectively.